Average entropy of a discrete probability distribution (part 1)

with respect to its dimensionality

In this blogpost I will present my own little research in maths: the search for an expression of the expected value of an n-dimensional discrete probability distribution.

Average entropy

The story begins while I was learning Information Theory at my uni. We talked about the entropy of a binary random variable. A binary random variable is a variable which only ever has two outcomes, 0 or 1.1. The pmf of a Bernoulli variable is

which reads as “the probability of a random variable to have a value of 1 is and to have a value of 0 is ”.

The entropy of a Bernoulli variable is then2

which looks like this:

Now for the question: what is the average entropy of a Bernoulli variable? If we were to pick a lot of random , calculated the entropy and averaged all of them, what number would we get as a result? We could just integrate over all ’s, and divide by the size of the space of . To borrow some physics intuition, to calculate the average density of an object, you first calculate the mass of an object by integration, and then divide by the size of the object (i.e. its volume). Here the “size” of the space happens to be . Anyways:

So the average entropy turns out to be . If we were to pick at random afew times and calculate the average, we should get something in the vicinity of . Cute.

Two’s a company, three’s a crowd

Let’s move a step up. What if we picked a random 3-dimensional “Bernoulli” and calculated it’s entropy. What is the average (or expected) entropy of a random 3-dimensional variable?

First, let’s define some stuff. 3-d Bernoulli variable does not exists, as the term Bernoulli is reserved for 2-dimensional case excusively. Higher dimensional random variables are caled Multinoulli, presumably because someone was feeling particularly creative that day. It’s entropy is calculated with:

There is constraint that and . Particular instance of this formula for the 3-dimensional case is:

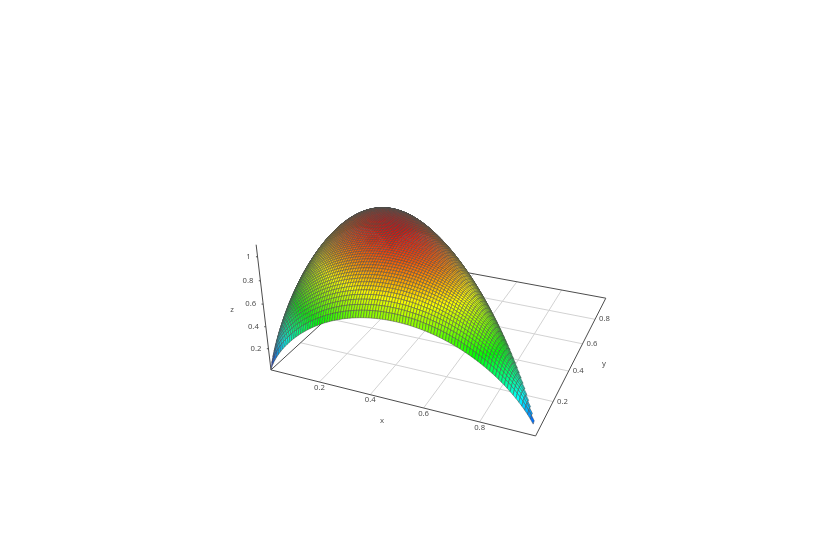

which looks like this:

How do we calculate the average entropy of this? Using the same process as above: integrate the function and divide by the “size” of the function’s domain. Here, the domain is a 2D triangle4 determined by the lines , , . This triangle is exactly one half of the unit square, so it has to have an area of . The average entropy of a 3-dimensional multinoulli variable is:

Okay, no visible pattern so far. To quote data scientists, “More data is needed!”. To dimension no.4

Three is a crowd, four is unthinkable. Literally

Let’s try to find out what happens if we calculate the average entropy of a random 4-dimensional multinoulli variable. This is something we usually cannot even imagine, however it’s quite feasible due to maths. The recipe is the same: integrate and divide by the volume of the domain.

First, we’ll have to add one more variable to the calculation of entropy:

Then, we calculate the volume of the domain. We are now in the 3D so we have planes instead of lines. The domain is a pyramid between these 4 planes:

and its volume is . (This is a good exercise for the reader btw)

And now for the integral…

WolframAlpha doesn’t really help anymore.

But some other symbollic computing tools come to rescue, namely Sympy

But some other symbollic computing tools come to rescue, namely Sympy

>>> from sympy import symbols, integrate, ln, simplify

>>> p, q, w = symbols('p q w', real=True)

>>> E = -p*ln(p)-q*ln(q)-w*ln(w)-(1-p-q-w)*ln(1-p-q-w)

>>> domain_volume = 1/6

>>> res = integrate(f, (w,0,1-p-q), (q,0,1-p), (p,0,1))

>>> # seven hungry years later

>>> print(result.real / domain_volume)

13/12

The result is .5 No obvious pattern yet.

And also, these integral solving functions take progressively more and more time. Running it for 2-dimensional case took ~4sec, for 3-dimensional case took ~20 sec, and for 4-dimensional case it took a whooping 163 sec to get an anwser. If we assume it’s a factorial growth, we’re looking forward to ~10 minutes for 5-dimensional case and ~2h for 6 dimensions.

I could, in theory, buy a better laptop but it won’t help me much. Therefore, we’ll need to look for other methods that trade computation speed for absolute precision. See you in the next part.

-

While this is not entirely true, I’ll leave it at that for brevity’s sake ↩

-

is a so-called natural logarithm, logarithm with a base .3 ↩

-

Usually, the entropy is defined using and not . However, using base e will be beneficial for the end result

. The only difference anyways is just the scaling factor of .

The volume of the domain is the easier part: it is now ↩

. The only difference anyways is just the scaling factor of .

The volume of the domain is the easier part: it is now ↩ -

It is a general pattern that the entropy of an -dimensional variable is a function of variables. This stems from the fact that ; you always need one variable less because you can calculate it from all the others. ↩

-

An astute reader might be wondering why is there a

.realattribute acess. The truth is - I don’t really know haha while solving these integrals, Sympy would always return some dubious complex numbers, but the result I needed was always the real part. I’m not sure why this keeps happening, bit it seems to be working ↩

↩

Back to main page